In the world of data analytics, accuracy is everything. Whether you are analyzing customer behavior, fleet operations, or financial performance, decisions based on bad data can lead to costly errors. That is why data cleaning (also known as data cleansing) is a critical part of any data-driven workflow. In this post, we will walk through the six essential steps to clean your data and explore how to ensure data accuracy through cleaning.

Why Data Cleaning Matters

Clean data is the foundation of reliable analysis, accurate reporting, and smart decision-making. Raw data is often riddled with inconsistencies, missing fields, duplicates, and other errors that can skew results. If left unchecked, these issues reduce the value of your insights and compromise your business strategy.

Data cleaning helps to:

- Improve data accuracy and consistency

- Eliminate duplicates and irrelevant records

- Ensure better compliance with regulations

- Enhance machine learning model performance

- Support better decision-making

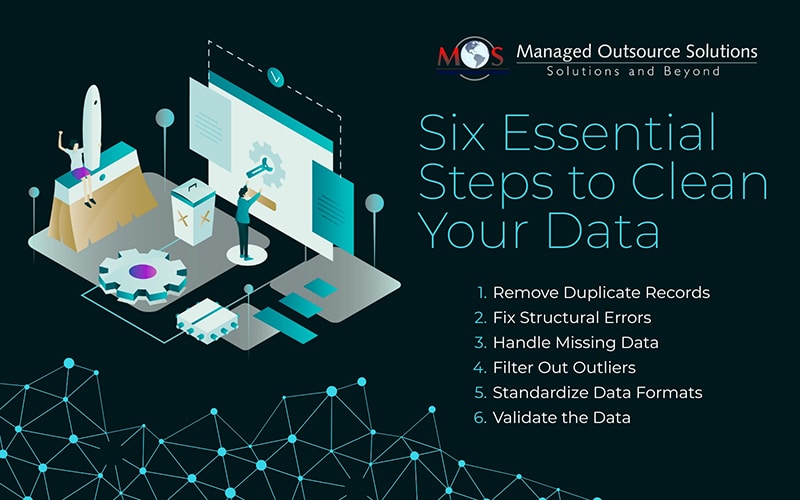

Essential Steps to Clean Your Data

Below are six essential steps to clean your data effectively:

Step 1:

Remove Duplicate Records: Duplicate data is one of the most common issues in large datasets. It often occurs due to system errors, repeated entries, or integration from multiple sources. Duplicate entries can inflate metrics and mislead analysis. Use automated tools or scripts to identify and delete repeated rows based on key identifiers such as customer ID, email, or transaction number.
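
As a rough illustration, the sketch below deduplicates a list of records in plain Python. It treats two rows as duplicates when their key fields match after trimming whitespace and lowercasing. The record layout and field names (`customer_id`, `email`) are hypothetical. With pandas, `DataFrame.drop_duplicates(subset=[...], keep="first")` covers the same idea for tabular data, though without the normalization step shown here.

```python
def remove_duplicates(records, key_fields):
    """Keep the first record seen for each combination of key fields."""
    seen = set()
    unique = []
    for record in records:
        # Normalize the key so "Ana@Example.com " and "ana@example.com" match.
        key = tuple(str(record.get(f, "")).strip().lower() for f in key_fields)
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique

# Hypothetical customer records; the third row repeats the first.
customers = [
    {"customer_id": "C001", "email": "ana@example.com", "name": "Ana"},
    {"customer_id": "C002", "email": "raj@example.com", "name": "Raj"},
    {"customer_id": "C001", "email": "Ana@Example.com ", "name": "Ana"},
]
deduped = remove_duplicates(customers, ["customer_id", "email"])
print(len(deduped))  # 2
```

Normalizing the key before comparing is a design choice: without it, trivial differences in casing or whitespace would let the same customer through twice.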

Step 2:

Fix Structural Errors: Structural errors refer to issues in the way data is formatted, named, or categorized. These can include inconsistent naming conventions (e.g. “NYC” vs. “New York City”), typos, or misplaced data fields. Structural inconsistencies make data harder to aggregate and analyze. Fixing them early helps streamline processing and improve reporting accuracy. Tools such as regular expressions, spreadsheet functions, or data wrangling libraries in Python or R can be used to fix structural errors.
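
A minimal sketch of fixing one kind of structural error, inconsistent naming, might combine a regular expression with a lookup table of known variants. The `CITY_ALIASES` mapping and the `clean_city` helper are hypothetical. In practice the mapping would come from your own data dictionary or naming conventions.

```python
import re

# Hypothetical mapping from normalized variants to one canonical label.
CITY_ALIASES = {
    "nyc": "New York City",
    "new york": "New York City",
    "new york city": "New York City",
    "sf": "San Francisco",
    "san francisco": "San Francisco",
}

def clean_city(raw):
    """Return a canonical city name for a messy input string."""
    # Collapse runs of whitespace and trim the ends.
    text = re.sub(r"\s+", " ", raw).strip()
    # Build a lookup key: lowercase, letters and spaces only ("N.Y.C." -> "nyc").
    key = re.sub(r"[^a-z ]", "", text.lower())
    # Use the canonical label if known; otherwise fall back to title case.
    return CITY_ALIASES.get(key, text.title())

for value in ["NYC", "  new   york ", "N.Y.C.", "boston"]:
    print(clean_city(value))
```

Values that are not in the mapping are only tidied, not guessed, so unfamiliar names still deserve a manual review.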

Step 3:

Handle Missing Data: Missing data handling is a crucial aspect of data cleaning. Missing values can occur for many reasons, such as data entry mistakes, software errors, or unavailable information. Depending on the amount and nature of the missing data, you have several options:

- Remove rows or columns with too many missing values

- Impute values using the mean, median, or a predictive model

- Leave them as-is if they won’t significantly affect the outcome

Always assess how missing data could affect your analysis before choosing a strategy.
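
The sketch below combines the first two options. It drops rows with more than a chosen number of missing fields, then fills the remaining gaps in one numeric column with the median of the observed values. Missing values are assumed to be stored as `None`, and the threshold (`max_missing=1`) and field names are illustrative assumptions, not recommendations.

```python
from statistics import median

def handle_missing(rows, numeric_field, max_missing=1):
    """Drop sparse rows, then median-impute one numeric field."""
    # Drop rows where more than max_missing fields are None.
    kept = [r for r in rows if sum(v is None for v in r.values()) <= max_missing]
    # Median of the observed values only (raises StatisticsError if none exist).
    observed = [r[numeric_field] for r in kept if r[numeric_field] is not None]
    fill = median(observed)
    # Return new dicts so the input rows are left unchanged.
    return [
        {**r, numeric_field: fill if r[numeric_field] is None else r[numeric_field]}
        for r in kept
    ]

# Hypothetical order data with gaps; order 3 is missing two fields.
orders = [
    {"order_id": 1, "region": "east", "amount": 120.0},
    {"order_id": 2, "region": "west", "amount": None},
    {"order_id": 3, "region": None, "amount": None},
    {"order_id": 4, "region": "east", "amount": 80.0},
    {"order_id": 5, "region": "west", "amount": 100.0},
]
cleaned = handle_missing(orders, "amount")
for row in cleaned:
    print(row)
```

The median is used here because, unlike the mean, it is not dragged around by a few extreme values; whether either is appropriate depends on why the data is missing.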

Step 4:

Filter Out Outliers: Outliers are data points that fall far outside the normal range. While some outliers are valid and important, others are the result of errors. Outliers can skew averages and mislead forecasting models. Statistical techniques such as Z-score analysis or the IQR (interquartile range) method help you detect outliers and decide whether to exclude them or investigate further.
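
As an example of the IQR method, the function below flags values that fall more than 1.5 times the IQR below the first quartile or above the third quartile, a common convention rather than a fixed rule. It only reports candidates, so you can still decide whether each one is an error or a legitimate extreme. The trip distances are made-up sample data.

```python
from statistics import quantiles

def iqr_outliers(values, k=1.5):
    """Return the values lying outside [Q1 - k*IQR, Q3 + k*IQR]."""
    # quantiles(..., n=4) returns the three quartile cut points Q1, Q2, Q3.
    q1, _, q3 = quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]

# Made-up daily trip distances in km; 250 looks like a data-entry error.
trip_km = [12, 15, 14, 13, 16, 15, 14, 250]
print(iqr_outliers(trip_km))  # [250]
```

A Z-score check works similarly, flagging values whose distance from the mean exceeds a chosen number of standard deviations. However, the mean and standard deviation are themselves pulled by the outliers, which is one reason the quartile-based approach is often preferred on small or skewed samples.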

Step 5:

Standardize Data Formats: Inconsistent date formats, currency symbols, measurement units, or text casing can wreak havoc on your analysis. Standardization ensures that all values follow the same format. Common examples include:

- Using YYYY-MM-DD for dates

- Converting all monetary values to a single currency

- Applying consistent units of measurement (e.g. converting all lengths to meters rather than mixing meters and feet)

- Using lower case or title case uniformly for text entries

Standardization ensures clean merges and comparisons across datasets.
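
The sketch below shows three of these conversions for a single record: parsing dates from several known input formats into `YYYY-MM-DD`, converting feet to meters, and lowercasing a text field. The `DATE_FORMATS` list and the field names are assumptions about what the source data looks like. Currency conversion is left out because it depends on exchange rates from an external source.

```python
from datetime import datetime

# Hypothetical input formats observed in the source systems, tried in order.
DATE_FORMATS = ["%Y-%m-%d", "%m/%d/%Y", "%d.%m.%Y", "%b %d, %Y"]
FEET_TO_METERS = 0.3048  # exact by definition of the international foot

def to_iso_date(raw):
    """Parse a date string in any known format and return it as YYYY-MM-DD."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(raw.strip(), fmt).date().isoformat()
        except ValueError:
            continue  # try the next format
    raise ValueError(f"Unrecognized date format: {raw!r}")

def standardize(record):
    """Return a copy of a record with a consistent date, unit, and casing."""
    return {
        "date": to_iso_date(record["date"]),
        "height_m": round(record["height_ft"] * FEET_TO_METERS, 3),
        "status": record["status"].strip().lower(),
    }

# Hypothetical raw records describing the same kind of event three ways.
raw_records = [
    {"date": "03/15/2024", "height_ft": 10, "status": " Delivered"},
    {"date": "15.03.2024", "height_ft": 6.5, "status": "DELIVERED"},
    {"date": "Mar 15, 2024", "height_ft": 12, "status": "delivered "},
]
for rec in raw_records:
    print(standardize(rec))
```

The order and choice of formats matter: an ambiguous value such as `03/04/2024` is read as March 4 here because the only slash-separated pattern in the list is month-first, so the list should reflect what your sources actually use. Note also that `%b` matches English month abbreviations under the default C locale.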

Step 6:

Validate the Data: Data validation is the process of ensuring that the data meets specific criteria or business rules. This includes verifying:

- Correct data types (e.g. numbers, text, dates)

- Expected value ranges (e.g. age cannot be negative)

- Referential integrity (e.g. valid customer IDs)

- Completeness and uniqueness constraints

Validation helps you detect incorrect entries and maintain data reliability. Automating this process through validation scripts or ETL tools can save time and ensure consistency.
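
A minimal sketch of such a validation script might check each rule from the list above and collect every violation rather than stopping at the first one. The field names, the set of known customer IDs, and the rules themselves are hypothetical examples.

```python
from datetime import date

REQUIRED_FIELDS = ("order_id", "customer_id", "age", "order_date")

def validate_orders(orders, known_customer_ids):
    """Return (row_index, message) pairs for every rule violation found."""
    problems = []
    seen_order_ids = set()
    for i, order in enumerate(orders):
        # Completeness: every required field must be present and non-empty.
        for field in REQUIRED_FIELDS:
            if order.get(field) in (None, ""):
                problems.append((i, f"missing {field}"))

        # Uniqueness: order_id must not repeat across rows.
        order_id = order.get("order_id")
        if order_id not in (None, ""):
            if order_id in seen_order_ids:
                problems.append((i, "duplicate order_id"))
            seen_order_ids.add(order_id)

        # Data type and range: age must be a non-negative integer.
        age = order.get("age")
        if age not in (None, ""):
            if isinstance(age, bool) or not isinstance(age, int):
                problems.append((i, "age is not an integer"))
            elif age < 0:
                problems.append((i, "age is negative"))

        # Data type: order_date must be a date, not a raw string.
        order_date = order.get("order_date")
        if order_date not in (None, "") and not isinstance(order_date, date):
            problems.append((i, "order_date is not a date"))

        # Referential integrity: customer_id must exist in the customer table.
        customer_id = order.get("customer_id")
        if customer_id not in (None, "") and customer_id not in known_customer_ids:
            problems.append((i, "unknown customer_id"))
    return problems

# Hypothetical sample data with one clean row and several rule violations.
known_customers = {"C001", "C002"}
orders = [
    {"order_id": 1, "customer_id": "C001", "age": 34, "order_date": date(2024, 3, 15)},
    {"order_id": 2, "customer_id": "C999", "age": -5, "order_date": date(2024, 3, 16)},
    {"order_id": 2, "customer_id": "C002", "age": "41", "order_date": "2024-03-17"},
    {"order_id": 4, "customer_id": "", "age": 29, "order_date": date(2024, 3, 18)},
]
for row, message in validate_orders(orders, known_customers):
    print(f"row {row}: {message}")
```

Returning a list of problems instead of raising on the first failure makes it easier to report all issues in a batch, which suits scheduled checks or an ETL step.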

How to Ensure Data Accuracy through Cleaning

Data accuracy doesn’t happen by chance; it is the result of a disciplined, repeatable cleaning process. Here is how to keep your data accurate:

- Establish data governance policies – Define naming conventions, data types, and formatting standards.

- Use automation tools – Tools like Python (pandas), R (tidyverse), OpenRefine, and data quality platforms can speed up cleaning and reduce manual error.

- Monitor your data sources – Identify and address issues at the source to prevent recurring problems.

- Conduct regular audits – Periodically review and clean datasets to keep them accurate over time.

- Document everything – Maintain a log of changes, transformations, and cleaning decisions for accountability and repeatability.
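
To make cleaning both automated and documented, one option is to run each step through a small pipeline that records row counts before and after, producing an audit trail alongside the cleaned data. This is a sketch under simple assumptions: records are dicts, each step is a hypothetical function that takes and returns a list of records, and Python's standard `logging` module stands in for whatever logging your team uses.

```python
import logging

logging.basicConfig(level=logging.INFO, format="%(message)s")
log = logging.getLogger("cleaning")

def run_pipeline(records, steps):
    """Apply (name, function) steps in order; return cleaned data and an audit log."""
    audit = []
    for name, step in steps:
        rows_in = len(records)
        records = step(records)
        # Record what each step did so the run can be reviewed and repeated.
        audit.append({"step": name, "rows_in": rows_in, "rows_out": len(records)})
        log.info("%s: %d -> %d rows", name, rows_in, len(records))
    return records, audit

# Hypothetical cleaning steps; each takes and returns a list of dicts.
steps = [
    ("drop_blank_emails", lambda rows: [r for r in rows if r.get("email", "").strip()]),
    ("normalize_emails", lambda rows: [{**r, "email": r["email"].strip().lower()} for r in rows]),
]

data = [{"email": "Ana@Example.com"}, {"email": "  "}, {"email": " raj@example.com "}]
cleaned, audit = run_pipeline(data, steps)
```

Saving `audit` next to the cleaned output, for example as JSON, gives reviewers a record of which step changed what during each run.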

To dive deeper into how structured data cleaning powers smarter decisions, check out our post “Navigating the Steps to Successful Data Cleansing.”

In today’s data-driven world, dirty data is not just a nuisance; it’s a liability. Following the six essential steps above, from removing duplicates to validating your data, helps ensure that your datasets are accurate, reliable, and analysis-ready. Never overlook missing data handling, standardization, and validation; they are critical to ensuring data accuracy through cleaning. By investing time and resources in proper data cleaning, businesses can unlock better insights, improve forecasting, and gain a true competitive edge.

Don’t let bad data hold your business back.

Contact our experts to learn how data validation and cleaning can transform your decision-making process.